Vampire Weekend's Surprising Jewish Stories

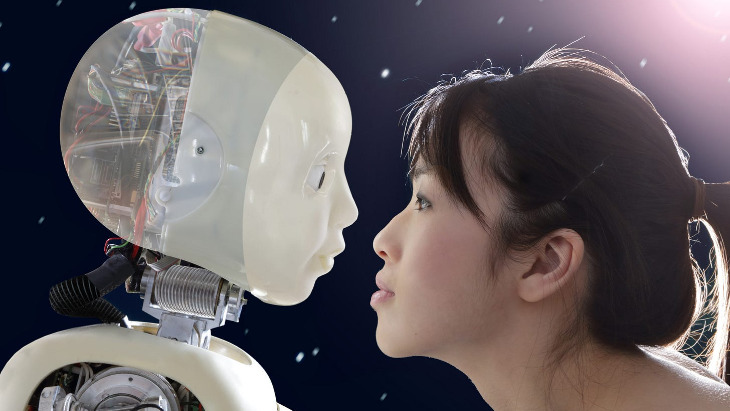

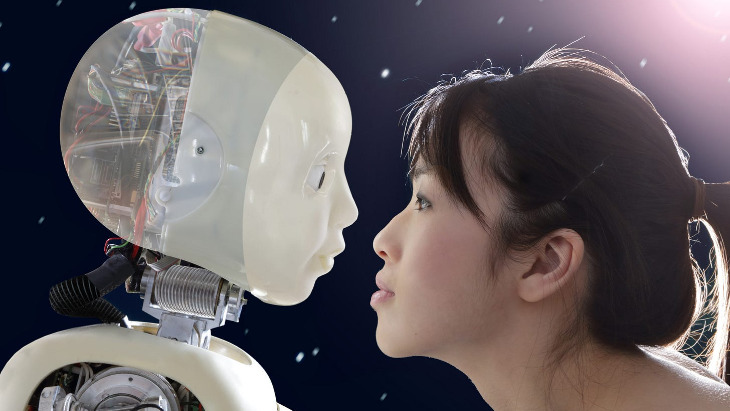

Can a machine ever be human?

Science-fiction stories often serve as guides to people’s hopes and fears for the future.

This is especially true regarding the idea of robots who have human-like intelligence, appearance, and personalities.

Usually, predictions for the arrival of these robots have been amusingly wide of the mark. The 1999 Robin Williams film Bicentennial Man envisages a brave new world of domestic robot assistants coming in…2005. Even Blade Runner, a sci-fi masterpiece, drastically underestimated how long it would take for robots to live amongst us, suggesting that by 2019 androids known as ‘replicants’ would be widespread and almost indistinguishable from humans.

But even if the when of Blade Runner’s future is outdated, the what is still relevant. This is because the rapid development of artificial intelligence and robotics over the last decade means that we may well be heading toward a world where human-like robots live in our houses and serve us in shops.

According to Goldman Sachs, this could happen as soon as 2030. Frankly, this again looks wildly optimistic—or pessimistic, depending on your point of view.

Regardless, it’s worth thinking about right now because the production of genuinely humanoid robots poses huge social and philosophical problems. And perhaps the most ethically pressing of these is whether robots could have moral rights: rights that are equivalent even to yours or mine.

The idea of robots with moral rights might sound ludicrous. After all, we wouldn’t think even for a moment that our microwaves or motorbikes could have rights. What would they even have a right to? Regular maintenance?

It’s an idea worth taking seriously, though, as people are likely to identify with robots that are human-like in appearance and behavior. This could then be the first step to recognizing them as equals.

How so? Right now, some robots are good at physical tasks, while certain AI systems are very good at replicating—or even exceeding—human intelligence within specific domains.

And engineers are continually trying to get AI closer to normal human behavior. In December, a group called OpenAI launched ChatGPT, a website allowing members of the public to have a ‘conversation’ with an AI. ChatGPT is supposed to answer questions in a natural way, but to be honest, right now it ‘talks’ like someone who learned English by reading an encyclopedia. When I asked ChatGPT what I should buy my wife for Christmas (don’t worry, I had already bought her something!), it told me:

“It’s always a good idea to consider your partner’s interests and preferences when choosing a gift. […] If your partner is an avid reader, consider giving them a book or a subscription to a magazine that matches their interests. This can be a thoughtful and personal gift that will be enjoyed for months to come.”

Not bad advice, certainly. But nor is it, in terms of tone and emotional intelligence, a natural response.

It doesn’t take much, though, to imagine an AI that becomes a little more conversationally fluent. Now imagine such an AI housed in a robotic body: it wouldn’t even have to look exactly like a human as long as it appeared to us as expressive, feeling, and perceptive of the world around it.

Such a robot might well be produced within our lifetimes and become part of our everyday life: helping us, talking to us, and caring for us when we’re ill or elderly. Would we be able to say that a robot like that doesn’t deserve equal rights to life, liberty, and the pursuit of happiness—particularly if it actively demanded those things of us?

This question can only be answered by first looking at the generally-accepted criteria for having moral rights.

On one traditional theory, having rights is a reciprocal matter: only individuals with the capacity to respect the rights of others are endowed with moral rights of their own. This amounts to saying that only human beings have moral rights, since animals aren’t capable of understanding what rights are or what the obligation to respect them involves. (Nor can infants and toddlers, of course, but they grow into children who are able to do so, and for that reason get a free pass.)

More modern theories tend to be less strict, however. Most hold that the criterion for having rights is whether an individual can be harmed. Harm is usually understood in terms of suffering, meaning that animals as well as humans are recognized as having at least some moral rights.

So what about robots that could fluently hold a conversation, behave in a human-like way, and even assert their humanity? If they lived amongst us as part of our families and communities, would it be a great injustice, as Blade Runner suggests, to treat them morally as mere things rather than as our equals?

The answer ultimately depends on whether you think their behavior and personalities are real or not.

The great mathematician and engineer Alan Turing argued that, yes, such a robot would count as a person. According to the Turing Test, the criterion for whether an AI is effectively the same as a human person is simply whether we’re incapable of distinguishing between them in the course of a conversation.

ChatGPT isn’t there yet, and not only because it exists on the internet rather than in a lifelike body. But there might be a robot like that before too long. And according to Turing’s line of thinking, a robot that did pass the test would then be owed just the same rights to life and liberty as you or me.

This means a world of robo-citizens awaits us: if not by 2030, as Goldman Sachs suggests, then surely in this century.

For many people, however—myself included—something doesn’t feel right about this conclusion.

After all: what a thing looks like isn’t always an accurate guide to what it is. Fool’s gold is not actually gold. The Amazon Alexa home assistant isn’t actually a person, despite what my four-year-old son thinks. And nor would a robot that passed the Turing Test for adults actually be a person.

The American philosopher John Searle made this point well in his Chinese Room thought experiment. According to Searle, someone can answer questions in Mandarin perfectly yet without any understanding of what they’re saying, provided the instructions for how to respond are reliable enough. And this is essentially what ChatGPT does: throw back bits of text it doesn’t understand according to clear instructions.

Even if a robot becomes practically indistinguishable from a human being in its conversation and behavior, this would remain the case. It might be quantitatively more advanced than ChatGPT, but it would remain qualitatively the same. It would, in other words, still be a machine rather than a person, and would therefore possess no moral rights.

So if and when these robots are developed, we shouldn’t be misled by their convincing appearance. At the climax of Blade Runner, the dying replicant Roy Batty says that his memories are about to be lost like tears in rain. He might well be right, but what would be lost is computer memory (RAM), not the memories belonging to a true person.

For more content like this, please visit www.beyondbelief.blog